Running or managing a large ecommerce website is no mean feat. Site speed and performance, conversion efficiency and stocking issues become critical concerns for ecommerce at scale. One common issue we see with every ecommerce site that comes to us for help with their SEO is that of inconsistencies with indexing.

What is Indexing?

Indexing is the whole process of collecting, parsing and storing web pages (and information about web pages) so that these pages can be retrieved quickly by the search engine. Search engines index pages, images, videos and additional data about websites found by search engine spiders and crawlers. On occasion there may be pages on your website that are unsuitable for indexing such as a login page, or your XML sitemap, in which case you can instruct the search engines to ignore these pages. Common ways to do this are via your site’ robots.txt file, or using a meta tag “noindex” within the <head> section of the HTML page that you do not want to be indexed.

You should endeavour to ensure that all of the pages that you would like people to be able to find are indexed, thus increasing your chance of having pages from your site appear in search results.

Problems with Indexing

A number of issues can contribute to incomplete indexing though particularly common with larger ecommerce sites are:

- Duplicate or substantially similar content

- Crawl errors

- Poor architecture/internal linking

Before we examine each of these common causes of incomplete indexing, we need to first confirm our hypothesis that there is a problem with indexing.

Diagnosing Incomplete Indexing

The first stage in identifying incomplete indexing is to know how many pages on your site should be indexed. Your XML sitemap is the best place to start as this should contain all the pages on your site that are desirous to index. If you don’t yet have an XML Sitemap this in itself could be part of the problem, as this is one of the best methods to inform search engine spiders which pages on your website you want them crawl and index. All the major search engines support XML Sitemaps, when created using the Sitemap protocol.

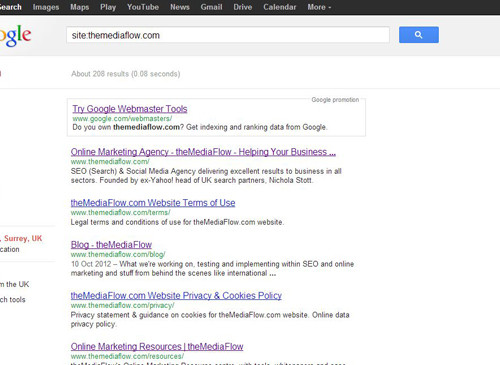

Once you have generated a Sitemap submit it to the search engine via Google (or Bing) webmaster Tools. Assuming you already have successfully submitted an XML Sitemap, you need to check to see if there’s an inconsistency between the number of URLs in the Sitemap, versus the number indexed.

Figure 1: Sitemaps in Google Webmaster Tools

Another first-port-of-call is within the >Health >Index Status option within Google Webmaster Tools, which will show you the total number of indexed URLs from your site, held in the Google index. On the Index Status page, click the button “Advanced” to get a breakdown of all URLs crawled, not selected and blocked by robots (i.e. in your Robots.txt file).

Figure 2: Index Status

There may be a good reason for a substantial difference between URLs crawled and URLs indexed such as:

- Individual blog comments may have a URL and not warrant indexing individually

- Images may have a URL and may not warrant indexing

- Pages may include the meta “noindex” tag

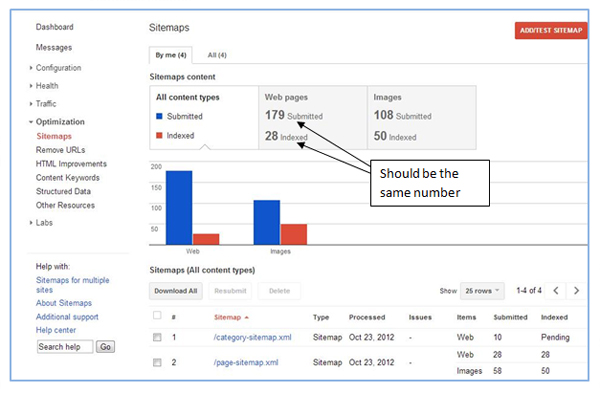

Additionally you can use a specially designed spider such as Screaming Frog, which can spider your site much as Googlebot or Bingbot will, and by default will crawl all the pages (images and other index able items) that should be indexed.

Figure 3: Screaming Frog SEO Spider

Once you have established the number of pages that you desire to be indexed you can then check that against the index in a couple of ways:

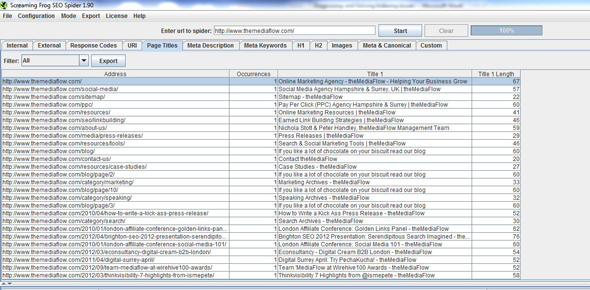

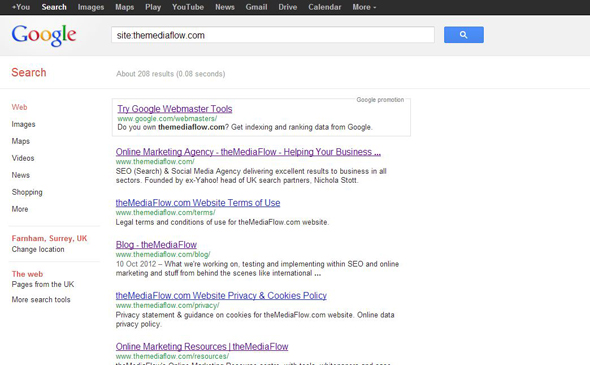

Using a Domain-restrict Advanced Query Operator

In the search box enter [site:] then immediately enter the URL of the site you want to check. This will then tell you how many pages there are in the search engine index for that site.

Figure 4: Site Index in Google

It is always best to double check this number in your webmaster tools, as there can be occasional inconsistencies depending on location and data centre. In which case when in Google Webmaster Tools go to >Optimization>Sitemaps, to check if there’s a difference between pages submitted and pages indexed. If there’s a tangible difference between the number of pages in your sitemap or own records, and the number of pages indexed then it is likely you have a problem with indexing. Let’s examine the more common causes:

Duplicate or Substantially Similar Content

Duplicate or substantially similar content is an issue that is so common with large ecommerce sites that we recommend planning to circumvent this happening at the site build stage. The problem occurs naturally due to the nature of many ecommerce sites that sell multiple versions of the same product. If your site architecture is designed to have one product per page, search engine crawlers may discern little to no difference from page to page and therefore only index a portion of your product level pages.

Ideally you should architect your site so that a lead product within a group is the default landing state for a page and then detailed attributes such as size or bit-head type could be selected by drop-down or similar selection method on-page.

You should also try the following:

- Differentiate and add as much unique content to product level pages as is reasonable

- Ensure URL structure and on-page mark-up is used correctly to “label” the differentiated aspects

- Use the rel=canonical attribute to indicate a preferred URL within a group of common product pages

Using rel=Canonical

A much needed solution for ecommerce sites, the ability to canonize a page can solve a lot of indexing problems that are attributed to duplicate of substantially similar content.

From Google “If Google knows that these pages have the same content, we may index only one version for our search results. Our algorithms select the page we think best answers the user’s query. Now, however, users can specify a canonical page to search engines by adding a <link> element with the attribute rel="canonical" to the <head> section of the non-canonical version of the page. Adding this link and attribute lets site owners identify sets of identical content and suggest to Google: “Of all these pages with identical content, this page is the most useful. Please prioritize it in search results.””

However the canonical tag can be pretty devastating if used incorrectly, for example in a misguided attempt to funnel page equity to a single page such as in adding a rel=canonical link to the homepage, from all other pages on a site; which may result in all other pages being de-indexed.

Crawl Errors

Common crawl errors can occur when a request for your page does not return a 200 “OK” Status. If a page has not been returned correctly, then it cannot be indexed. Common crawl errors include;

- Incorrect or circular redirections

- Server errors/slow sites

- Poor code

- Page not found (404)

You can access your crawl errors within Webmaster Tools and get URL-level detail by performing a “Fetch as Googlebot” on a URL that is generating a crawl error.

When it comes to larger ecommerce sites a very common problem is that of products going out of stock and pages for such products being allowed to 404. This can then lead to false indexing issues if you are not up to date on the status of stock and genuine pages, versus pages that should be indexed.

When it comes to ecommerce sites, a healthy presence in the search engine index and a good user experience we would recommend considering the following:

- Change the product landing page to label the product “Out of Stock”, and provide links to similar products

- Set automatic 301s to a canonical product page when a product in a group of similar products goes out of stock

- Allow the page to 404, update the sitemap, and correct internal references to the out of stock product URL (best used when no similar products exist)

Poor Architecture or Internal Linking

Search engine crawlers travel via links, meaning that the more links you have to a page, the greater the chance of it being crawled and indexed. Sites that are poorly architected with many navigational steps between home and product level pages can lead to some unlinked and unloved product level pages remaining outside the index.

Ideally this problem should be overcome at the outset with a best-practise information architecture that uses a flat and wide structure so that pages are no further than two or three levels of navigation from the homepage. Additional interlinking techniques include:

- Linking to popular categories directly in the footer

- Adding popular products, hot items or similar widgets on all pages

- Having a sitemap page available in the footer that links to all product pages (within reason), or lead products for very large sites

Other ways to assist the search engine bots when your site architecture is sub-optimal include:

- Your XML Sitemap

- Fixing any and all crawl errors

These are just a handful of tools and ways to diagnose indexing issues, as well as common causes and solutions. Some indexing issues are caused by rather complex issues such as bot loops, problems with query parameter URLs, cloaking or similar bad-practise. In such cases we would always advise a webmaster to seek a good quality SEO agency to assist in a clean and quick resolution.